And what actually moves the needle

If your organization is trying to "figure out AI" and it feels like you're spinning your wheels - you're not alone. And honestly? It's not your fault.

Most organizations are approaching AI adoption with the same playbook they've used for every other technology rollout. The problem is, AI isn't like other technology. The old playbook doesn't work here.

I've talked with numerous teams over the past year who are stuck. Not because they're not trying - they're trying hard. But the approaches that seem sensible on paper keep falling flat in practice.

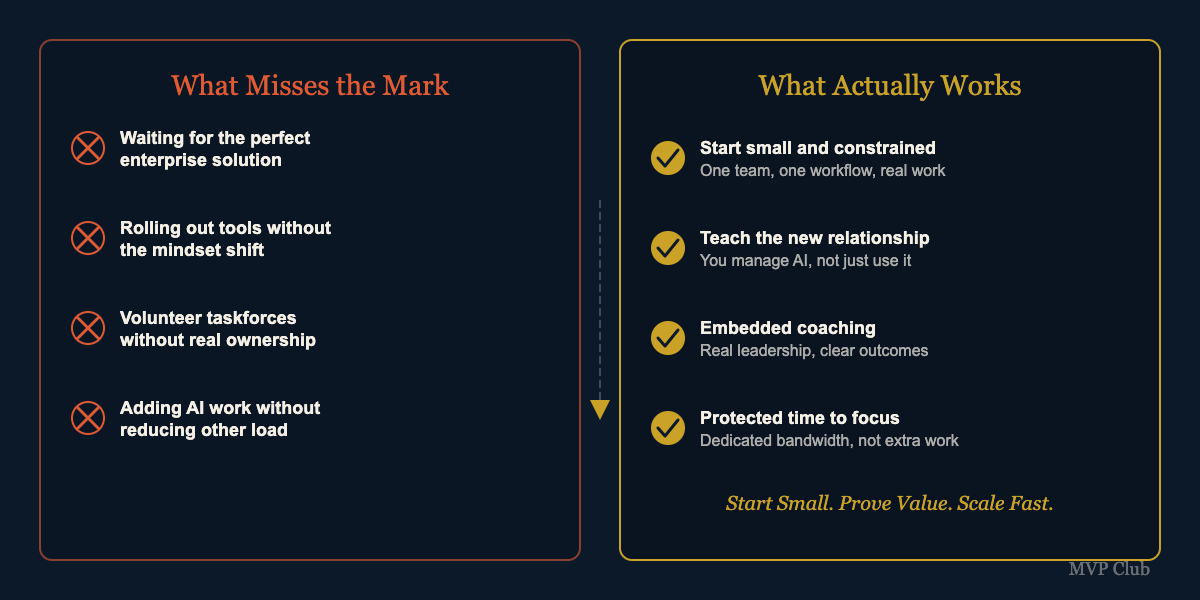

Here are the four patterns I see most often, why they don't work the way you'd hope, and what actually does.

1. Waiting for the Perfect Enterprise Solution

This one makes total sense on the surface. You want to do AI "right." You want governance, security, compliance, a platform everyone can use. So you kick off an enterprise-wide evaluation. You loop in IT, legal, procurement. You build a committee.

And then... months pass.

The wheels of bureaucracy turn slowly. Meanwhile, the tools keep evolving. By the time you've selected and deployed your "perfect" solution, it's already a generation behind - and your people still haven't built the muscle to use it well.

Here's the thing: AI adoption isn't primarily a technology problem. It's a behavior change problem. You can't solve behavior change by selecting the right vendor.

What works instead: Start small and constrained. Pick one team, one workflow, one tool. Get people actually using AI in their real work - with appropriate guardrails - while the enterprise solution gets sorted out. You'll learn more in four weeks of actual practice than in four months of evaluation.

2. Rolling Out AI Without the Mindset Shift

Most people's first experience with AI looks something like this: they open ChatGPT, ask it a question like they're Googling something, get a mediocre answer, and walk away thinking "I don't get what the hype is about."

That's not a shortcoming of the tool. It's a gap in how people are being introduced to it.

AI isn't a search engine. It's not a calculator. It's closer to a new working relationship - one where you're the manager, setting context and goals, evaluating outputs, and providing direction while AI executes in the details.

This is a fundamentally different way of working, and it requires a mindset shift that most organizations never explicitly teach. Without it, people default to "help me spruce up this email" - which is fine, but it's maybe 5% of what's possible.

What works instead: Before you roll out tools, help people understand the new relationship they're building. The skill isn't "how to prompt" - it's learning to trust, delegate, iterate, and let go. That's not something you pick up from a lunch-and-learn.

3. Creating a Volunteer AI Taskforce (Without Teeth)

Another reasonable-sounding approach: pull together enthusiastic people from across the organization to figure out AI together. Form a taskforce. Meet every couple of weeks. Share learnings.

In theory, this creates cross-functional collaboration and distributed ownership. In practice, it usually stalls.

Why? Because everyone on the taskforce still has their regular job. There's no dedicated leader with real authority. There's no clear mission or deliverables. People come to meetings, share what they've been experimenting with, and then nothing happens in between because everyone's too busy with their actual responsibilities.

A taskforce can work - but only if it's set up with real leadership, a specific mandate, and protected time for members to actually execute.

What works instead: If you're going to form a group, give it teeth. Assign a dedicated owner. Define a specific outcome (not "explore AI opportunities" but "implement 3 workflows in the next 60 days"). And critically - reduce other responsibilities so people can actually focus.

4. Asking People to Figure It Out on Top of Everything Else

This might be the most common pattern, and it's the most insidious.

Someone gets tapped to "help the team figure out AI." Maybe they're enthusiastic about it. Maybe they were voluntold. Either way, they're now responsible for learning AI, experimenting with tools, developing recommendations, training colleagues...

...on top of their existing 100% workload.

So now they're working 105-110% of their previous hours, fragmented across more responsibilities, with no clear success metrics. The AI work becomes the thing that gets deprioritized when deadlines hit. Progress is slow. Shared understanding never develops. And everyone ends up frustrated.

What works instead: Treat AI adoption like the strategic priority it is. If you want someone to lead this work, reduce their other load. Create protected time. Define what success looks like. Otherwise, you're setting people up to fail - and that's not fair to them or the organization.

What Actually Moves the Needle

All four of these approaches share a common thread: they treat AI adoption as something that can happen passively, in the margins, without fundamentally changing how work gets done.

It can't.

AI adoption is a practiced skill. It requires consistent reps, feedback loops, and - honestly - some discomfort as you learn a new way of working. You can't watch a demo and go. You can't read a playbook and be ready. The skill is in the iteration.

What we've seen work is the opposite of the slow, diffuse, everyone-figure-it-out-on-your-own approach:

Start small. Prove value. Scale fast.

That means:

- Pick one team, not the whole org
- Identify 2-3 high-value workflows to transform (not dozens)
- Work in a focused sprint with real coaching (not self-guided exploration)
- Measure actual productivity gains (not vibes)
- Build from proof points, not projections

When teams work this way, they don't just learn AI - they build compounding assets. Workflows they can reuse. Prompt libraries tailored to their work. Documented expertise that makes the next workflow easier to build.

A Different Approach: The 4-Week Productivity Sprint

This is exactly why we built the 4-Week Productivity Sprint.

It's designed to install AI workflows into your team's real processes - without training programs, strategy cycles, or major time commitments. In four weeks, you get measurable productivity gains by doing actual work, not sitting through programs.

Week 1: Workflow Discovery

- Identify 2-3 high-value use cases
- Map current processes
- Define success metrics

Weeks 2-3: Build + Install

- Create tailored AI workflows
- Install into real team processes
- Train by doing actual work

Week 4: Measure + Expand

- Track impact on cycle time, output, workload
- Identify next workflows to automate
- Design scale plan (optional)

By the end, your team has 2-3 production-ready AI workflows, role-specific prompt libraries, Loom walkthroughs for each workflow, and - most importantly - measurable productivity gains you can point to.

That's proof. And proof is what lets you scale.

Ready to Stop Spinning Your Wheels?

If any of this resonated - if you've been trying to crack the AI adoption code and it's not clicking - let's talk.

Or, if you're an individual practitioner who wants to build your AI skills through consistent practice, join our community at MVP Club. We work in sprints, share what's working, and learn together.

Either way - you don't have to figure this out alone.

Jill Ozovek is co-founder of MVP Club, where she helps professionals and teams adopt AI through practice-based coaching. She's an ICF-certified coach with 15 years of experience helping people navigate career transitions - and she believes AI adoption is the most important skill shift of our careers.